The factal theory being developed can be applied in several areas to perform interesting tasks:

- More complex languages, such as simple "pig" English:

- HaveNWant sees the purpose of language as transferring simulation networks from one person to another. (For example, I say "a car is coming" to you, and you change how you think.) While studying Chomsky grammars in an MIT graduate class, I sketched out a Language Deserializer that uses networks of factals to take in a series of words and produce linked-up factals for the simulator.

- A HaveNWant network that learns, recognizes, and speaks Morse Code.

- <notes from Chomsky grammar course MIT 2012?>

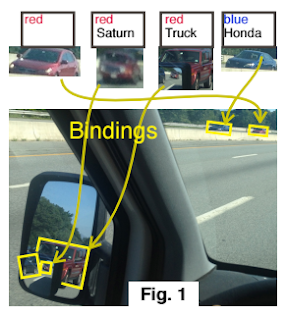

- Bindings (6 pages) are created in a network of MaxOr factals. They connect sensations at lower levels to models at upper levels, and each connection lasts for seconds or minutes. In the figure, bindings map various retinal areas to four models of vehicles, and each determines configuration parameters.

- Modeling in Sparse Spaces: YAAGIL from yet another perspective.

- Face Blindness: how YAAGIL might explain this observed phenomenon.
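The bindings item above can be illustrated with a small sketch. It is not the HaveNWant implementation. It assumes that a MaxOr node outputs the largest of its inputs and remembers which input produced it, and that a binding from a lower-level source (a retinal area) to an upper-level model (a vehicle) simply expires after a fixed lifetime. The names `max_or`, `Binding`, `BindingTable`, and `lifetime`, along with the area and model labels and their scores, are hypothetical and only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sketch: a "MaxOr" node is ASSUMED to output the maximum of its
# inputs and to remember which input won. Not the HaveNWant implementation.


@dataclass
class Binding:
    model: str         # upper-level model, e.g. a vehicle model
    area: str          # lower-level sensation source, e.g. a retinal area
    strength: float    # MaxOr output that created the binding
    expires_at: float  # bindings are assumed to persist for a fixed time


def max_or(inputs: dict[str, float]) -> tuple[str, float]:
    """Return the (source, value) pair with the largest input value."""
    source = max(inputs, key=inputs.get)
    return source, inputs[source]


@dataclass
class BindingTable:
    lifetime: float = 60.0  # seconds; a hypothetical value
    bindings: dict[str, Binding] = field(default_factory=dict)

    def update(self, model: str, area_scores: dict[str, float], now: float) -> Binding:
        """Bind `model` to whichever area scores highest under MaxOr."""
        area, strength = max_or(area_scores)
        self.bindings[model] = Binding(model, area, strength, now + self.lifetime)
        return self.bindings[model]

    def active(self, now: float) -> dict[str, Binding]:
        """Drop expired bindings and return the ones still in force."""
        self.bindings = {m: b for m, b in self.bindings.items() if b.expires_at > now}
        return self.bindings


if __name__ == "__main__":
    # Illustrative inputs only: how well each retinal area matches each model.
    table = BindingTable(lifetime=30.0)
    scores = {
        "car":   {"left": 0.2, "center": 0.9, "right": 0.4},
        "truck": {"left": 0.7, "center": 0.1, "right": 0.3},
    }
    for model, area_scores in scores.items():
        b = table.update(model, area_scores, now=0.0)
        print(f"{b.model} -> {b.area} ({b.strength})")
```

Taking the maximum of the inputs gives each model a single "winning" sensation source to bind to. The expiry time stands in for the seconds-to-minutes lifetime mentioned above.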